This post describes a project I’ve been working on during Christmas this year. It is a project that’s absolutely not for the inexperienced, so if you decide to take any of the ideas in this post – which you’re absolutely welcome to do – I won’t be able to assist.

This is Part 1 of the post. Part 2 hasn’t been published yet (I’ll edit that when I get around to Part 2). The scripts referred to here and all the other code will be made available on GitHub when Part 2 is published.

The problems:

Ubiquity or Functionality?

There are loads of amateur radio logging programs out there, and over the years I’ve tried many of them. Most suffer from a common problem, however: because our radio equipment is increasingly used under computer control, we opt to keep our logs on our shack computer, which can have soundcards and serial ports connected to the radios. That understandable quest for functionality in the logging software has a disadvantage, though: if we operate a portable station at different locations, we either have to take our logging computer with us, or else go through some routine of remembering to import and consolidate the logs in a single place later.

Other logging programs, such as 2M0SQL’s Cloudlog, take the alternative approach of being an online, hosted log. You can enter your contacts from anywhere, but that comes at the cost of not being able to connect the logging computer to your radio – for sound security reasons, web browsers can’t access serial interfaces connected to your computer.

Today, there are hacks which try to resolve this disadvantage. M0VFC’s CloudlogCAT program, for example, essentially gives Cloudlog some ability to talk to the radio by proxying commands through a local client computer. In the future, WebUSB should allow web browsers to talk to connected devices in a secure manner, but that will require new chips in our radios, and I can’t see the manufacturers adding support any time soon.

Backups and security

Having our logs stored on a local logging machine has a substantial disadvantage: we are responsible for our own backups and keeping our logs safe. We know this isn’t happening. All too often, we hear of people who have lost their logs in a hard-drive or virus incident, with the resulting impact on other people who need those log records. One of the advantages of a system hosted online, by someone else, is that they can maintain backups.

And other problems…

I’ve ranted before about how I don’t believe amateur radio log programs generally have the right data model: when I go on a DXpedition somewhere, or even just make a handful of contacts from a SOTA summit, those QSOs should be grouped together in my log as they share common information like locator square, IOTA/SOTA reference, etc. This means we need the concept of ‘activation’ in the log, as a parent of the child QSOs. This becomes especially critical if I’m uploading the QSOs to Logbook of the World, for example, as I want all the QSOs to be signed with the right grid square, which may not apply to the rest of the QSOs in the log. I’m amazed that so many logging programs still haven’t got this concept right. As we increasingly rely on automatic methods for uploading and signing QSO information and then claiming awards, there’s massive scope for errors if people are just signing data with a default certificate configuration because that’s all their program allows.
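To make the idea concrete, here is a minimal Python sketch of the parent/child model I have in mind. The class and field names (`Activation`, `QSO`, `my_grid` and so on) and the callsigns and references are purely illustrative; they don’t correspond to any existing logging program’s schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class QSO:
    """A single contact: only the details specific to that contact."""
    call: str
    band: str
    mode: str
    utc: str  # e.g. "2018-12-27T11:42Z"


@dataclass
class Activation:
    """Parent record: everything the child QSOs have in common."""
    my_call: str
    my_grid: str
    sota_ref: Optional[str] = None
    iota_ref: Optional[str] = None
    qsos: List[QSO] = field(default_factory=list)

    def log(self, qso: QSO) -> None:
        """Attach a contact to this activation."""
        self.qsos.append(qso)

    def flattened(self) -> List[Dict[str, Optional[str]]]:
        """One row per QSO, each stamped with the activation's details."""
        shared = {
            "my_call": self.my_call,
            "my_grid": self.my_grid,
            "sota_ref": self.sota_ref,
            "iota_ref": self.iota_ref,
        }
        return [
            {**shared, "call": q.call, "band": q.band, "mode": q.mode, "utc": q.utc}
            for q in self.qsos
        ]


# Made-up example values, for illustration only.
summit = Activation(my_call="M0XYZ/P", my_grid="IO84", sota_ref="G/LD-001")
summit.log(QSO("G4ABC", "40m", "SSB", "2018-12-27T11:42Z"))
rows = summit.flattened()
```

Because the grid square and references live on the activation rather than on a station-wide default, every exported row carries the right location without my having to retype it per QSO.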

Enter Log4OM

I like to try different logging programs on a regular basis as it’s a fast-moving field in terms of functionality. About a year ago, I re-discovered Log4OM and decided to stick with it. Whilst it isn’t perfect (it doesn’t support the concept of activation described above, for example, and being desktop software it doesn’t allow ubiquitous logging), it has most of the features I require and good QSL management tools – critical for me as I deal with a lot of DXpedition QSOs each year.

At a technical level, Log4OM runs using a SQLite database by default – a sensible choice as it allows for easy first-time installation that ‘just works’. For those of us who know what we are doing, however, we can opt to switch to a MySQL database instead. MySQL is a database server program that’s designed to be used in a client/server architecture and is a very mature product – it is behind a lot of the world’s websites.

For the last year, I’ve been using a local MySQL server with my Log4OM installation (thereby continuing to sit on the ‘functionality’ side of the ‘functionality/ubiquity balance’ I described above). Then it occurred to me a couple of months ago that there was no need to do this…

To the cloud! (AWS EC2, to be precise)

As I said, MySQL is designed for use in a server/client architecture, so just running the database locally on my computer meant I wasn’t taking full advantage of its abilities. A few weeks ago, I spun up a t2.nano Ubuntu Linux machine in AWS EC2, part of Amazon’s cloud-computing infrastructure, and installed MySQL on it. It was then just a matter of uploading the data from my local MySQL server to the one in EC2 and then changing the Log4OM profile for each of my logs to reflect the new login details. Since Log4OM doesn’t support MySQL connections over SSH, I also created a different user with restricted permissions and a non-default password that I’d use for logging in from Log4OM, and removed the default users that are created by Log4OM when new databases are created.
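As a rough illustration of the restricted user, the following Python sketch just builds the SQL statements you might run on the server. The database name, user name and password are placeholders, and the list of privileges is my assumption about what a logging client needs for day-to-day use; it is not taken from the Log4OM documentation.

```python
import re

# Conservative whitelists so names can be placed safely into the SQL text.
_NAME = re.compile(r"^[A-Za-z0-9_]+$")
_HOST = re.compile(r"^[A-Za-z0-9_.%-]+$")


def restricted_user_sql(database, user, password, host="%"):
    """Return SQL statements creating a user limited to one database."""
    if not (_NAME.match(database) and _NAME.match(user) and _HOST.match(host)):
        raise ValueError("unexpected characters in database, user or host")
    # Escape backslashes and single quotes for a MySQL string literal.
    escaped = password.replace("\\", "\\\\").replace("'", "''")
    account = f"'{user}'@'{host}'"
    return [
        f"CREATE USER {account} IDENTIFIED BY '{escaped}';",
        # Data-level privileges only: an assumption about what the logger needs.
        f"GRANT SELECT, INSERT, UPDATE, DELETE ON `{database}`.* TO {account};",
    ]


# Placeholder names; substitute your own.
statements = restricted_user_sql("logbook", "shack_logger", "change-me")
for statement in statements:
    print(statement)
```

The `host` value of `%` lets the user connect from any address, which suits logging from anywhere; passing a specific address instead would narrow it further.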

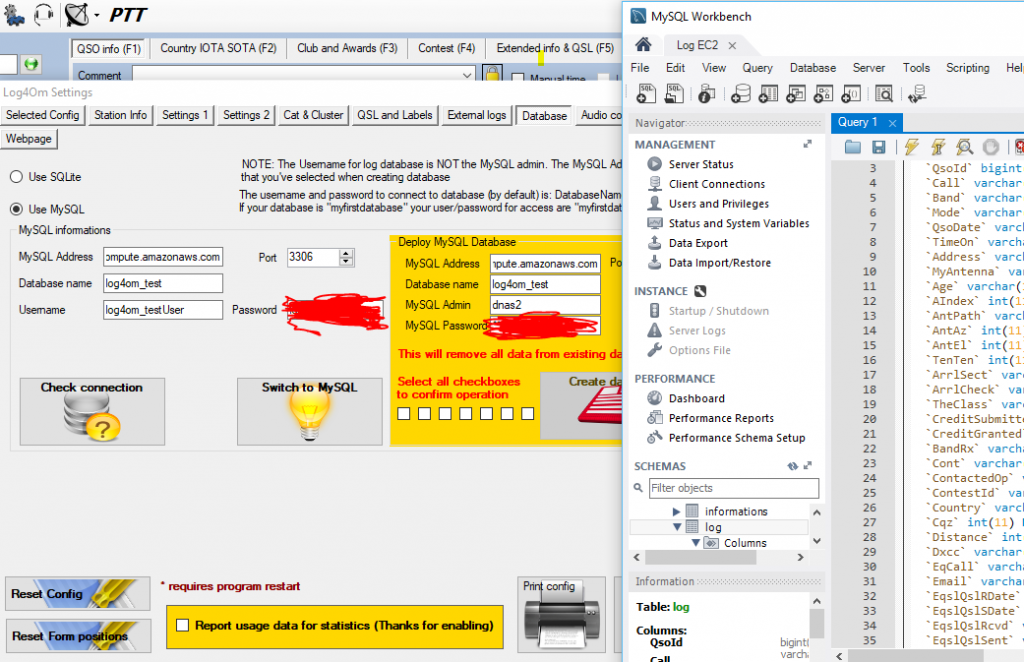

Testing connection to EC2 from Log4OM settings

Sure enough, I was soon connected to the database in the cloud from Log4OM:

Connected

(For the benefit of anyone else trying this, I also found a bug in Log4OM’s MySQL support, and an associated workaround, in the course of moving to a new MySQL installation.)

To summarise where we’re up to before I go any further:

- I’ve now got Log4OM on my local desktop computer, acting as desktop software with CAT and soundcard connections to my K3, but using a database hosted by Amazon.

This resolves the ubiquity / functionality conundrum: I have full functionality from my logging software but the flexibility to connect to a single, always-on, database from any computer anywhere with an internet connection, as long as it has Log4OM installed.

A note about performance

Connecting Log4OM to a hosted database in the cloud doesn’t come at nil performance cost. Everything, particularly some of the batch operations such as marking several QSLs as sent, takes a few more seconds than you’ll be used to on a local server. As you might expect, this is worst with very large logs (tens of thousands of QSOs), but I haven’t found it to be so annoying that this slowdown would outweigh the other benefits.

Handling backups

As I mentioned, one of my concerns was backups and data security: I wanted to not have to bother about such issues in a hosted world. Had I gone for a database on the AWS RDS product, this would indeed have been handled by Amazon for me, but because I had gone for EC2 (for both cost and extensibility reasons), I would have to handle backups.

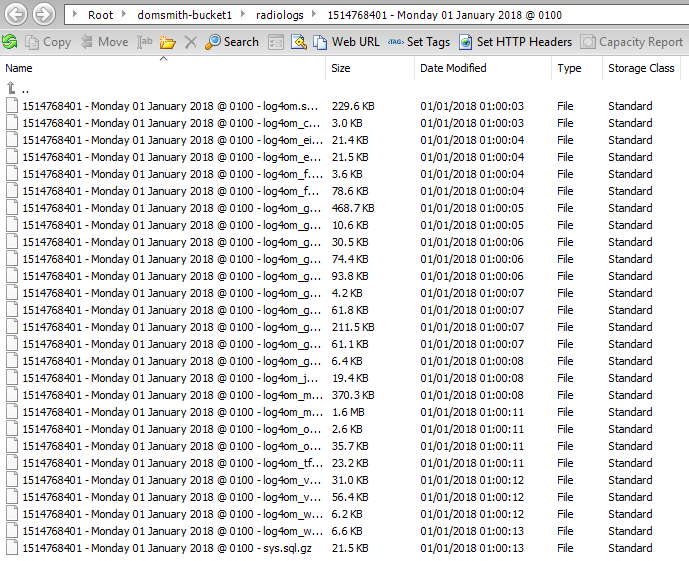

In a sense, backups are even more critical on EC2 than on home computers, as EC2 storage is ephemeral: if the server is switched off, all its data is lost and has to be recreated.

The first stage was to write a script to ship gzip’d copies of all the MySQL database tables to an S3 bucket every month. I also then wrote a script to store weekly incremental ADIFs of contacts since the start of the month, which are then emailed to me from the server. This means that the maximum data loss I should be exposed to at any time is 7 days (although Log4OM is set to upload all QSOs immediately to Clublog, so I could even recover the last 7 days if I needed to).

Backups stored in an S3 bucket
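The weekly incremental export can be sketched in a few lines of Python. This is not my actual script: it assumes the QSOs have already been read from the database into dicts keyed by standard ADIF field names, and the function names are invented for the example. The only format rule it relies on is that each ADIF field is written as `<NAME:length>value`, records end with `<EOR>` and the header ends with `<EOH>`.

```python
from datetime import date


def adif_field(name, value):
    """Format one ADIF field as <NAME:length>value (ASCII text assumed)."""
    text = str(value)
    return f"<{name.upper()}:{len(text)}>{text}"


def adif_record(qso):
    """Format a dict of ADIF field names to values as one record."""
    return " ".join(adif_field(k, v) for k, v in qso.items()) + " <EOR>"


def month_to_date(qsos, today):
    """Keep QSOs dated from the first of today's month up to today."""
    start = today.replace(day=1).strftime("%Y%m%d")
    end = today.strftime("%Y%m%d")
    return [q for q in qsos if start <= q["QSO_DATE"] <= end]


def incremental_adif(qsos, today):
    """Build the text of an ADIF file covering the month so far."""
    header = "Incremental log export\n" + adif_field("ADIF_VER", "3.1.0") + "\n<EOH>\n"
    body = "\n".join(adif_record(q) for q in month_to_date(qsos, today))
    return header + body + "\n"


# Made-up contacts for illustration.
log = [
    {"CALL": "G4ABC", "QSO_DATE": "20181227", "TIME_ON": "1142", "BAND": "40M", "MODE": "SSB"},
    {"CALL": "DL1XYZ", "QSO_DATE": "20181130", "TIME_ON": "0915", "BAND": "20M", "MODE": "CW"},
]
export = incremental_adif(log, date(2018, 12, 28))
```

Because each export always starts from the first of the month, any single weekly file is enough to rebuild the month so far on top of the last full monthly dump.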

Contest logs

For reasons that will become clear in Part 2 of this post, I also wanted to make ADIF backups of my various contest logs available separately, as I’m a great believer in making contest logs public for transparency. Having installed lighttpd as a web server on the EC2 instance alongside the MySQL database, a script checks for any contest entries the previous weekend each Monday and creates ADIFs for each contest. These are then exposed over HTTP (but not necessarily published on a website). There’ll be more on this in Part 2!
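The ‘previous weekend’ selection is the only slightly fiddly part of that job, so here is a hedged Python sketch of it. The `contest_id` and `date` keys are hypothetical stand-ins for whatever the real database columns are called, and treating a contest as anything logged with a contest identifier on the Saturday or Sunday is a simplifying assumption.

```python
from collections import defaultdict
from datetime import date, timedelta


def previous_weekend(today):
    """Return (saturday, sunday) of the most recent fully completed weekend."""
    days_back = (today.weekday() - 5) % 7  # Saturday is weekday 5
    if days_back < 2:
        # Run on a Saturday or Sunday: this weekend isn't over, use last week's.
        days_back += 7
    saturday = today - timedelta(days=days_back)
    return saturday, saturday + timedelta(days=1)


def contest_logs(qsos, today):
    """Group last weekend's contest QSOs by contest identifier."""
    saturday, sunday = previous_weekend(today)
    logs = defaultdict(list)
    for qso in qsos:
        if qso.get("contest_id") and saturday <= qso["date"] <= sunday:
            logs[qso["contest_id"]].append(qso)
    return dict(logs)


# Made-up QSOs; 31 December 2018 was a Monday.
sample = [
    {"call": "G4ABC", "date": date(2018, 12, 29), "contest_id": "EXAMPLE-TEST"},
    {"call": "DL1XYZ", "date": date(2018, 12, 30), "contest_id": ""},
    {"call": "F5ABC", "date": date(2018, 12, 22), "contest_id": "EXAMPLE-TEST"},
]
weekend_logs = contest_logs(sample, date(2018, 12, 31))
```

Each key in the result would then become one ADIF file, so a weekend with no contest entries simply produces nothing.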

As with all the other scheduled scripts that I mention in this post, I’ve also got a ‘rebuild’ version of the script for restoring old data when I lose the ephemeral storage.

With that, we have two of the three problems resolved: I have a good balance between ubiquity and functionality, and backups are being handled. Now onto the third…

SOTA Logger

One of the use-cases where I find Log4OM to be weaker is logging SOTA activations. Whilst it’s great that it supports SOTA at all – many logging programs don’t – entering QSOs in the Log4OM UI is a bit complex. This is because the SOTA reference has to be entered on another tab and, while the database schema allows for different values for the ‘MyGridReference’ field per QSO, there’s nowhere in the UI to enter your grid reference other than the default for the station.

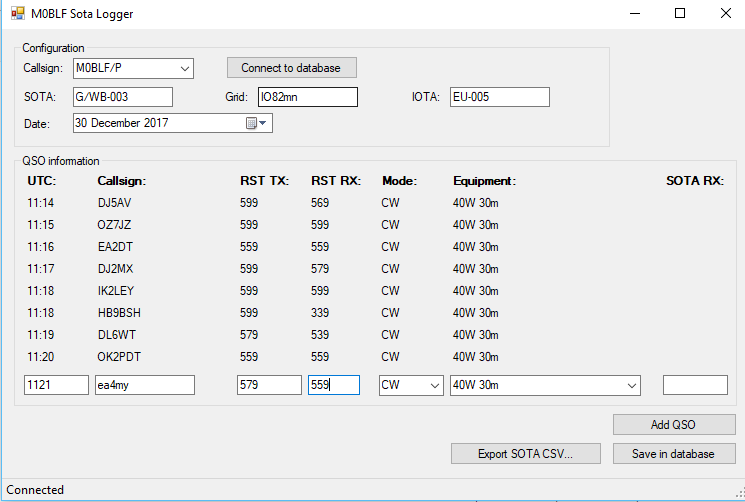

Since my database is in the cloud, it is now divorced from Log4OM itself. This means that, as long as I don’t alter the schema (the database structure) in a way that is incompatible with Log4OM, I don’t have to use Log4OM for logging: anything that gets into the database by whatever means will be picked up by Log4OM. My solution to the SOTA problem was therefore to write a program called SOTA-Log4OM. This does what it suggests: it’s a very simple program for logging SOTA activations that connects to the Log4OM database and enters the QSOs with the correct information, including grid reference, etc. It also creates an activation CSV for upload to the SOTA website.

SOTA Logger

Each week on a Tuesday (to give me time to type up a log from a weekend activation), ADIF records for each SOTA activation in the previous week are created (if there are any), and exposed for download over HTTP… again, the reasons for this will become clear in Part 2 of this post!

Again, I’ll take a break to summarise my progress:

- We have the MySQL database for Log4OM running in the cloud, as an always-on instance that can be connected to from anywhere

- This allows us to keep the functionality advantages of having desktop logging software with CAT control and soundcard connected to the radio, whilst letting us log from anywhere without having to merge logs or synchronise databases

- We are backing up the logs monthly to S3, with weekly incremental backups over email

- Contest logs are also created separately and exposed over HTTP

- For logging SOTA activations, we have our own simple UI that logs QSOs into the same MySQL database as used by Log4OM

- Those SOTA logs are then exposed for download over HTTP

Signing LoTW records correctly

As described in the introduction, I have a problem with logging software that automatically signs QSOs for LoTW in that there is often no separation of QSOs by activation. When you sign a LoTW upload, you are signing with a ‘Station Location’, which is a combination of DXCC, Gridsquare and IOTA (if applicable). I rarely see logging software create new ‘Station Location’ records for different QSOs in the same log, however – Log4OM certainly doesn’t. This lack of support has stopped me from regular LoTW uploads in recent months, especially given the number of SOTA activations I’ve done with a handful of QSOs from each different QTH. I don’t want to be creating new ‘Station Locations’ myself for each QTH.

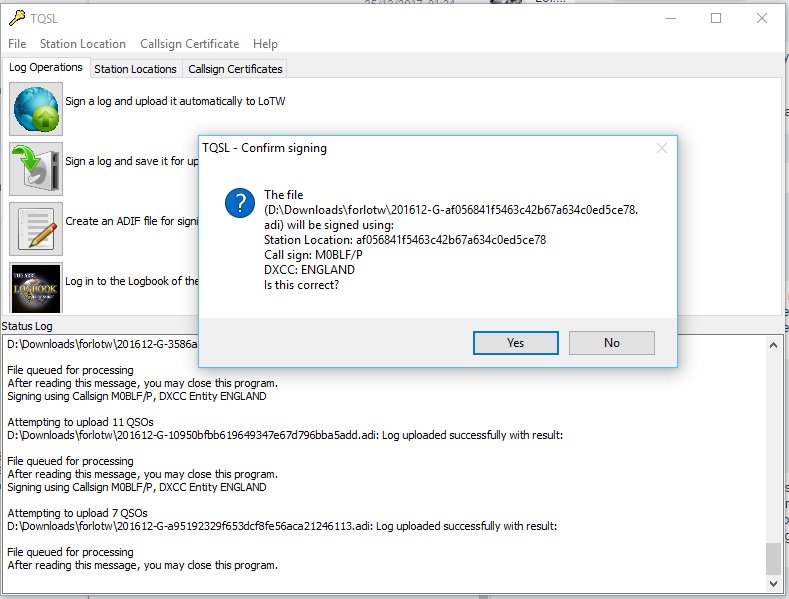

I do have all that information in my logs, though, so I can deal with this now that I have a database server where I can run scripts. Each month, for each QSO, I create a ‘placehash’: an MD5 hash of the result of concatenating my callsign with all the information needed for a ‘Station Location’ in a particular way. A zip file of ADIF files containing the previous month’s QSOs, separated out by placehash, is then exposed over HTTP from the server, ready for download.
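In Python, the placehash idea looks roughly like the sketch below. The field order, the `|` separator and the upper-casing are assumptions made for the example rather than the exact recipe my script uses, and the dictionary keys are hypothetical; what matters is that the same callsign and location details always produce the same hash.

```python
import hashlib
from collections import defaultdict


def placehash(callsign, dxcc, grid, iota=""):
    """MD5 over the callsign plus the Station Location details."""
    parts = [
        callsign.strip().upper(),
        str(dxcc),
        grid.strip().upper(),
        iota.strip().upper(),
    ]
    return hashlib.md5("|".join(parts).encode("utf-8")).hexdigest()


def split_by_place(qsos):
    """Group QSOs so each group can be signed with one Station Location."""
    groups = defaultdict(list)
    for qso in qsos:
        key = placehash(
            qso["my_call"], qso["my_dxcc"], qso["my_grid"], qso.get("my_iota", "")
        )
        groups[key].append(qso)
    return dict(groups)


# Made-up QSOs from two different locations.
month = [
    {"call": "G4ABC", "my_call": "M0XYZ/P", "my_dxcc": 223, "my_grid": "IO84"},
    {"call": "G3DEF", "my_call": "M0XYZ/P", "my_dxcc": 223, "my_grid": "io84"},
    {"call": "DL1XYZ", "my_call": "M0XYZ/P", "my_dxcc": 223, "my_grid": "IO92"},
]
by_place = split_by_place(month)
```

Each group would be written out as its own ADIF file with the hash in the filename, which is what makes the later signing step a simple matter of matching names.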

Also created monthly is a station_data file. The TrustedQSL software uses a station_data file (which is actually XML) to define the Station Locations. All I need to do is to overwrite the station_data file stored in the user’s Roaming profile in a Windows installation of TrustedQSL with the one created by the logging server, and TrustedQSL will ‘know’ about all the different station locations I have visited.

I just then need to sign the log (containing the placehash in the filename) with the Station Location for the same placehash and I can be sure that all my LoTW records are signed correctly.

Signing a LoTW record by placehash

Ideally I’d like to automate this process on the server. Although it looks like this would be possible with the tqsl command line in the Linux version, I haven’t yet found a way to install TrustedQSL without the 320MB of (mainly graphics-related) dependencies, which I’d like to avoid given that it’s being stored on an ephemeral system.

Clublog OQRS merging

Is there anything else I can solve while I’m at it? As I said, one of my biggest time-sinks is handling QSL cards for DXpeditions I’ve been on. OQRS – the ability to request a QSL card online, with postage covered over PayPal – has certainly sped up the process a lot but there’s still a manual step: each time I do cards I have to log into Clublog, download an ADIF containing the QSL requests, and merge it into the Log4OM log, so that the Print Labels option in Log4OM prints requested QSL cards.

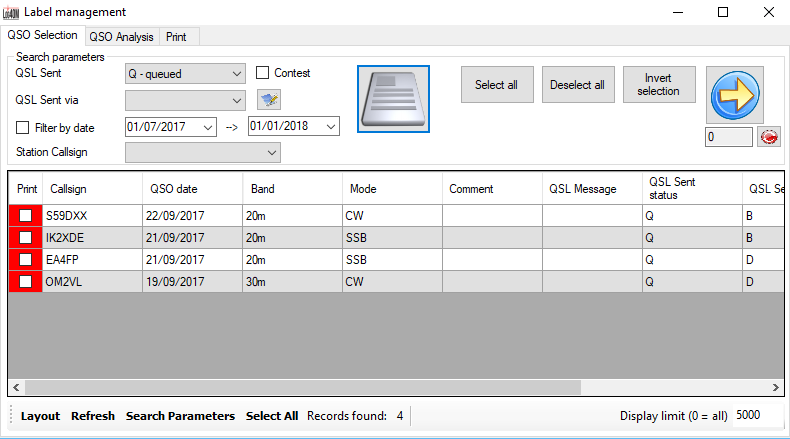

So, I wrote a script to live on the database server to download any OQRS files from Clublog on a weekly basis, and do the merge for me. This means I just need to press the Print button in Log4OM and requested card labels emerge without me doing anything else.

QSL labels ready to print
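The merge step boils down to reading the requests out of an ADIF file and flagging the matching QSOs in the log. The Python sketch below shows that logic on in-memory dicts only. It assumes requests can be matched on callsign, date, time to the minute and band, and it uses the ADIF `QSL_SENT` value `R` (requested) as the flag; how Log4OM itself records a pending card, and exactly which fields the Clublog download contains, are not something this sketch claims to reproduce.

```python
import re

# Matches <NAME>, <NAME:length> and <NAME:length:type>.
TAG = re.compile(r"<([A-Za-z0-9_]+)(?::(\d+))?(?::[^>]*)?>")


def parse_adif(text):
    """Parse ADIF text into a list of dicts keyed by upper-case field name."""
    header_end = re.search(r"<eoh>", text, re.IGNORECASE)
    pos = header_end.end() if header_end else 0
    records, current = [], {}
    while True:
        match = TAG.search(text, pos)
        if not match:
            break
        name = match.group(1).upper()
        pos = match.end()
        if name == "EOR":
            if current:
                records.append(current)
            current = {}
        elif match.group(2) is not None:
            length = int(match.group(2))
            current[name] = text[pos:pos + length]
            pos += length
    return records


def qso_key(qso):
    """Fields assumed sufficient to identify the same QSO in both files."""
    return (qso["CALL"].upper(), qso["QSO_DATE"], qso["TIME_ON"][:4], qso["BAND"].upper())


def mark_requested(log, requests):
    """Flag logged QSOs that have a matching request; return how many matched."""
    wanted = {qso_key(r) for r in requests}
    matched = 0
    for qso in log:
        if qso_key(qso) in wanted:
            qso["QSL_SENT"] = "R"
            matched += 1
    return matched


# Made-up data for illustration.
download = "header text\n<EOH>\n<CALL:5>G4ABC <QSO_DATE:8>20181227 <TIME_ON:4>1142 <BAND:3>40M <EOR>\n"
logbook = [
    {"CALL": "G4ABC", "QSO_DATE": "20181227", "TIME_ON": "114200", "BAND": "40m"},
    {"CALL": "G3DEF", "QSO_DATE": "20181227", "TIME_ON": "114500", "BAND": "40m"},
]
count = mark_requested(logbook, parse_adif(download))
```

Matching on a key rather than inserting the downloaded records means a request for a QSO that isn’t in the log is simply ignored instead of creating a stray entry.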

Another script also creates an ADIF file of sent QSLs each month and exposes it over HTTP. Again, see Part 2 to understand why!

As you can probably tell, this is all part of a much bigger project. I’m really keen to hear feedback on where I’ve got to so far, and hopefully I’ll be able to publish Part 2 soon. There’s a lot more to come!